The first prize in the digital category of the recently held 2022 Colorado State Fair art competition controversially went to a work created using Midjourney, an AI-powered text-to-image generator. Artists were understandably upset, but I would argue that society at large should welcome such a development.

A number of people seem to think that using AI to produce art is tantamount to cheating because it supposedly devalues the human effort artists put into creating art, specifically the years spent honing their craft: committing brush stroke technique to muscle memory, developing an almost instinctive grasp of color theory, and so on. As I have stated before, AI is so effective because machine learning models can compress years of human practice into several hours of continuous training. This means that, given enough data and compute, AI will eventually overtake humanity at reliably delivering perfect (nay, superhuman) technique, including the technique used in crafting art.

Given this fact, one possibility is to separate AI-augmented digital art from art that uses only what are considered standard tools (as of this writing), such as digital artists’ favorite, Adobe Photoshop, limited to a certain version. Ironically, nearly all software companies have been racing to incorporate AI into their products. Adobe has touted Sensei’s artificial intelligence and machine learning models as an integral feature of Photoshop moving forward, which makes AI tools ubiquitous and would require artists to use outdated versions of their software if they wish to avoid AI altogether. By creating distinct categories based on how advanced digital artists’ tools are, we can ensure that artists compete on a level playing field. To enforce these categories, an AI classification model can be trained to differentiate between human-created digital art and outputs from image models such as Midjourney, Stable Diffusion, and DALL-E.

More importantly, though, there is much more to art than craft. Craft may involve putting in hours to develop technique, but it can be argued that taste, the ability to recognize true art and to envision such a project, is more valuable.

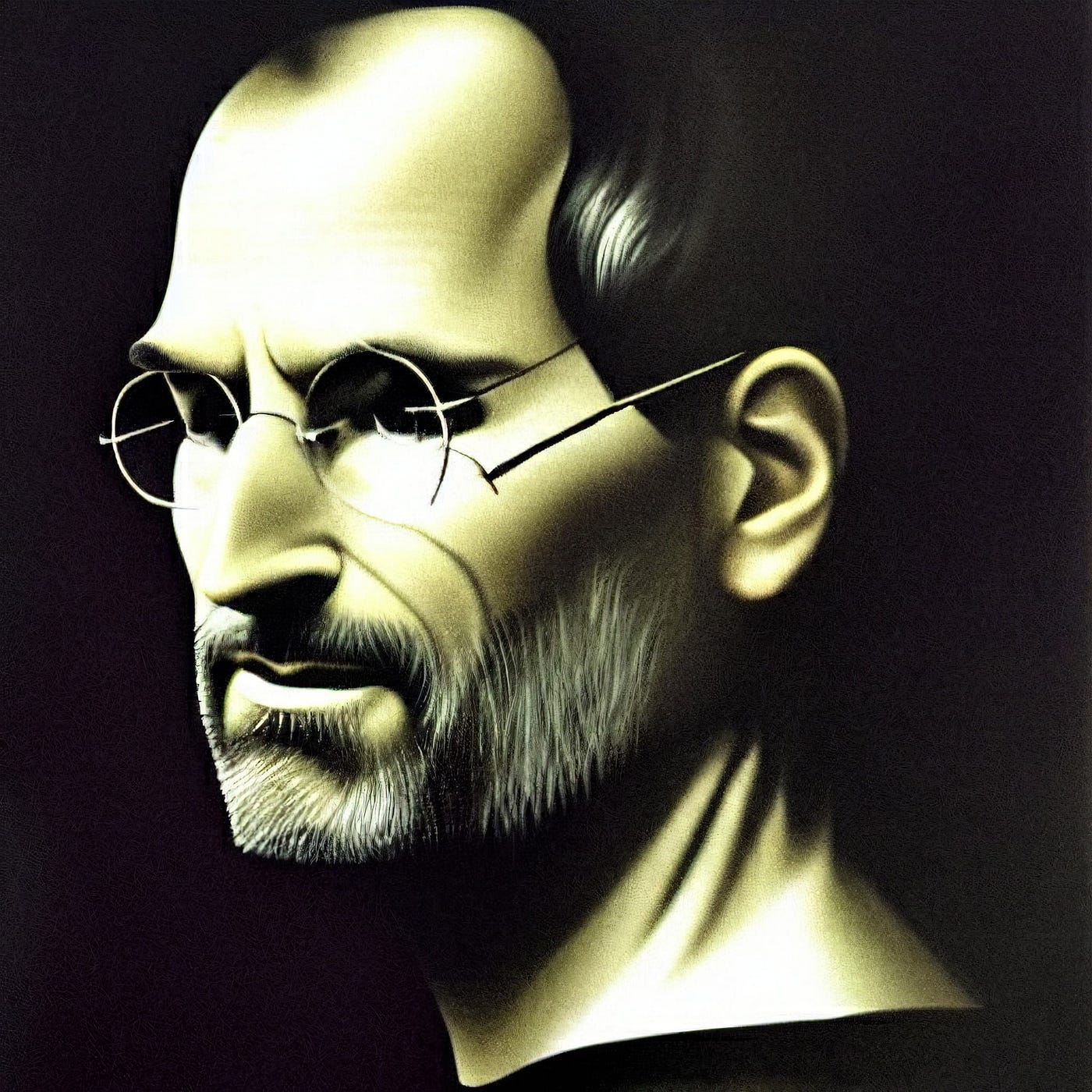

It is essential to note that Midjourney, along with other AI image generators in vogue such as Stable Diffusion and DALL-E, is a text-to-image model, which means that users still need to specify text prompts. Contrary to what some have been led to believe, people do not simply press a button to create art. Text-to-image models take in text prompts, such as “chiaroscuro masterpiece portrait of Steve Jobs by Caravaggio”, and require tuning certain parameters, such as a guidance scale, to generate an image based on the prompt.
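To give a rough sense of what a guidance scale does, here is a minimal sketch of classifier-free guidance, the mechanism commonly used in Stable Diffusion-style models. It assumes the model produces two predictions at each step, one that ignores the prompt and one that follows it; the function name `apply_guidance` and the plain-list representation are hypothetical, and nothing here is confirmed to reflect how Midjourney works internally.

```python
def apply_guidance(unconditional, conditional, guidance_scale):
    """Blend two model predictions (hypothetical helper, for illustration only).

    unconditional: prediction made without looking at the prompt
    conditional:   prediction made with the prompt
    guidance_scale: how hard to push toward the prompt-following prediction
    """
    return [
        u + guidance_scale * (c - u)
        for u, c in zip(unconditional, conditional)
    ]


# A scale of 0 ignores the prompt, 1 uses the prompted prediction as-is,
# and larger values exaggerate the difference the prompt makes.
ignore_prompt = apply_guidance([0.0, 1.0], [1.0, 1.0], 0.0)
follow_prompt = apply_guidance([0.0, 1.0], [1.0, 1.0], 1.0)
exaggerated = apply_guidance([0.0, 1.0], [1.0, 1.0], 7.5)
```

Higher values tend to make the image follow the prompt more literally at the cost of variety, which is one reason tuning this parameter is part of the user's job.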

Today’s state-of-the-art text-to-image AI models use a neural network architecture to convert text inputs to images. This line of research originated from image captioning models, which take image inputs and generate appropriate descriptive captions. Computer scientists then attempted to build a model that can do the reverse: take in descriptive text inputs and output the appropriate image. While image search algorithms on search engines such as Google use keywords to select appropriate images from a universe of existing images on the Internet based on something like a similarity score, text-to-image models instead start with an image of random noise and algorithmically modify it to generate the image that maximizes its similarity to the provided text prompt. The datasets used in training these models, such as Stable Diffusion’s LAION-5B dataset, come from image-text pairs scraped from the Internet, leading to idiosyncrasies such as celebrity social media handles working as stand-ins for their actual names.
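The noise-to-image idea can be illustrated with a toy loop. This is only a sketch under heavy assumptions: the "image" and the "prompt" are both short lists of numbers in the same space, similarity is plain cosine similarity, and each step simply nudges the image toward the prompt vector. Real models use learned neural networks for every one of these pieces, and the names `cosine_similarity` and `generate_from_noise` are made up for this example.

```python
import math
import random


def cosine_similarity(a, b):
    """Similarity score between two vectors, ranging from -1 to 1."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def generate_from_noise(prompt_vector, steps=200, step_size=0.1, seed=0):
    """Start from random noise and move step by step toward the prompt.

    prompt_vector is a stand-in for an encoded text prompt (an assumption;
    real systems obtain it from a trained text encoder).
    Returns the final "image" and the similarity recorded after each step.
    """
    rng = random.Random(seed)
    image = [rng.gauss(0.0, 1.0) for _ in prompt_vector]  # pure noise
    history = [cosine_similarity(image, prompt_vector)]
    for _ in range(steps):
        # Keep most of the current image, mix in a little of the target.
        image = [
            (1 - step_size) * pixel + step_size * target
            for pixel, target in zip(image, prompt_vector)
        ]
        history.append(cosine_similarity(image, prompt_vector))
    return image, history


image, history = generate_from_noise([0.2, 0.9, 0.4, 0.1])
print(f"similarity before: {history[0]:.3f}, after: {history[-1]:.3f}")
```

The similarity to the prompt rises with each step until the noise has been almost entirely replaced. The contrast with image search is that nothing is retrieved: the result is built up from noise rather than selected from existing images.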

Crafting text prompts is therefore a science and an art(!) unto itself — there are numerous scientific papers published on the nuances of prompt engineering.

Given this, artists who use text-to-image models such as Midjourney still have an active role to play in creating art. As I have written previously, corporate workers of tomorrow must take on more of a managerial mindset as AI automates an increasing number of mundane operational tasks. The same is true in art: the artists of tomorrow must take on more of an editorial mindset and guide AI as it takes care of tasks requiring craft and technique.

Many of history’s most celebrated artists, from Picasso to Duchamp to Warhol, have embraced the idea of found art. For the uninitiated, found art, also known as found objects or ready-mades, consists of existing objects that artists select and that are recognized as art by virtue of having been identified as such by an artist. Found art does not have to be crafted by an artist; instead, the artist’s contribution comes from possessing the tasteful eye to identify found art as art. Found art is an exceptional case of art emerging from taste rather than craft. AI-generated art is not as extreme as found art, since users still need to craft text prompts and refine model parameters, but it is just as valid as art.

The great artist Michelangelo said it best: “Every block of stone has a statue inside it and it is the task of the sculptor to discover it.” In the olden days, this required artists to hone their technique in the use of tools such as chisels and brushes. As recently as two decades ago, computers turned these tools digital as artists began using software such as Photoshop and Blender. It should therefore come as no surprise that artists today use AI-powered tools to produce award-winning artwork. The cutting edge is called state of the art for a reason.

Of course, given sufficient data points (artworks and their judged scores) from competitions, we can always just build an AI model that creates the optimal artwork to win a competition, but this merely shifts the human input from text prompts to judges’ scores. This then would be the state of the art of found art, until the next advancement comes along.

This article was also posted on Medium.